Modern vulnerability management systems routinely generate reports containing thousands or even tens of thousands of flagged issues. But not every “critical” vulnerability actually represents an equally urgent business risk.

This disconnect often leads security teams to waste time triaging low-priority items while missing real exposure, resulting in ever-growing backlogs and resources spent on vulnerabilities far less likely to be exploited. Automated triage promises relief, but security teams rightly question its transparency, reliability, and accountability, and the idea of delegating risk decisions to AI understandably raises concerns. These are non-trivial issues. Who’s ultimately responsible if the AI deprioritizes a vulnerability that later gets exploited? Can the AI explain its logic in a way that supports compliance and audit requirements, or that even makes common sense?

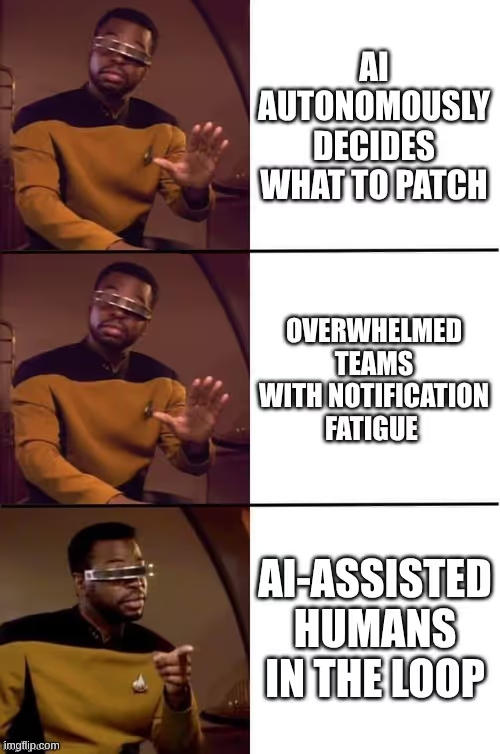

For agentic AI to earn trust, it must operate within a clearly defined, human-accessible governance structure, so that it enhances human oversight rather than replacing it.

Why traditional vulnerability management fails

Despite decades of iteration, traditional vulnerability management remains plagued by inefficiencies and false priorities. The core issue is not a lack of detection but a failure to contextualize risk. This section outlines the key failure modes of legacy approaches, from static scoring to cognitive overload, and shows why a shift toward contextual, AI-guided methods is needed.

Static scoring lacks strategic nuance

This is where agentic AI offers a meaningful shift. Unlike rule-based or assistive AI tools, agentic AI systems operate with a measure of autonomy. These systems can ingest, process, and contextualize environmental signals such as an asset’s mission-criticality, network architecture and exposure, and known exploit availability in order to make goal-directed decisions about what to act on first. Exploit availability, however, is rapidly becoming less of a distinguishing factor: it is now not merely possible but relatively easy for even non-coders to vibe-code working exploits by having an LLM read the relevant CVE posting.

From mechanical sorting to intelligent ranking

Traditional vulnerability management forces teams to mechanically sort through endless alerts using rigid, severity-based rules that fail to reflect real business risk. Using agentic AI in a vulnerability exposure management (VEM) context, however, enables intelligent risk ranking by helping to prioritize issues based on how threats actually interact with critical systems, data flows, operational roles, and even one’s network architecture itself.

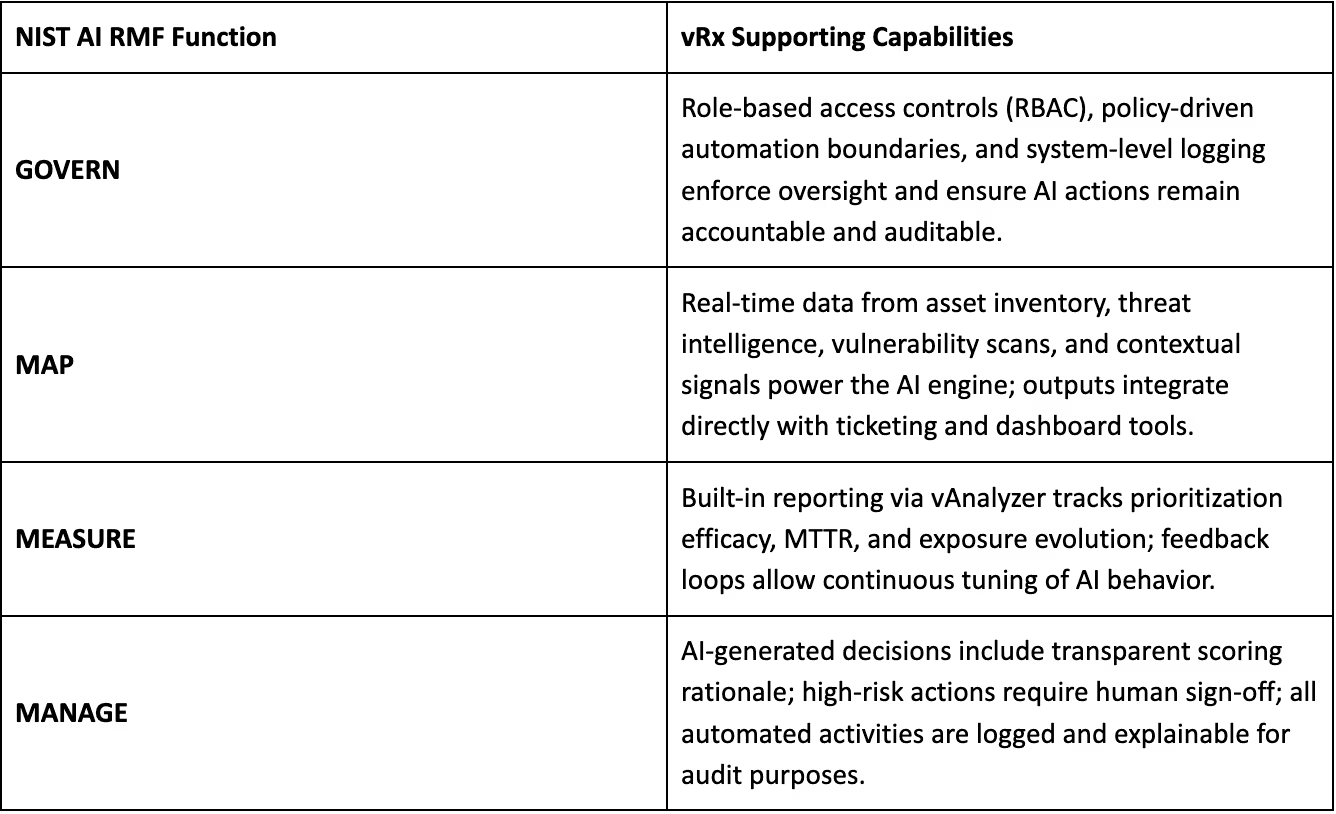

The NIST AI Risk Management Framework (AI RMF) provides exactly the structure needed to support this shift. By emphasizing principles like traceability, interpretability, and human accountability, it helps organizations deploy agentic AI safely and responsibly. Within this framework, AI becomes not a black box but a managed system with policies, thresholds, and review loops that align with organizational risk tolerance. The outcome isn’t simply faster triage; it’s a smarter, safer, and more strategic approach to exposure management.

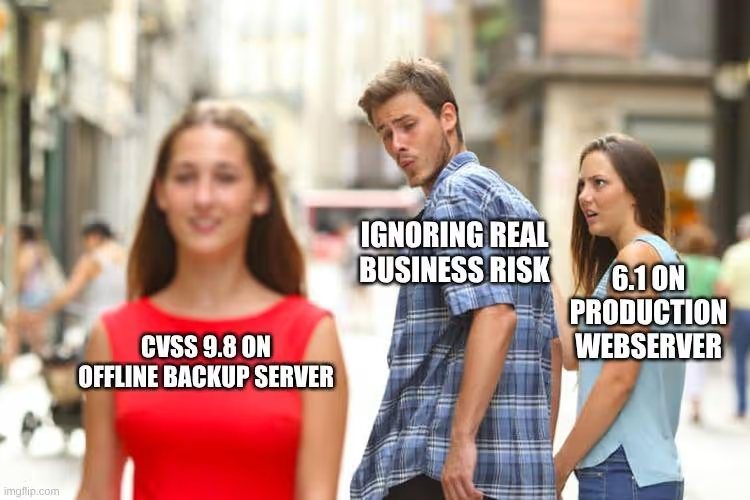

CVSS oversimplifies and misleads

The Common Vulnerability Scoring System (CVSS) was designed to provide a standardized baseline for evaluating vulnerabilities, but in practice, it often oversimplifies the risk landscape. CVSS does not account for business context, exploitability in the wild, or the actual exposure path of a vulnerability within a given environment. A high CVSS score on an internal backup server might be treated as a bigger priority than a moderate vulnerability in a publicly accessible web application simply because the scoring system lacks nuance.
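The backup-server comparison above can be made concrete with a minimal sketch. The `Finding` fields, the multipliers, and the `contextual_score` function are all hypothetical choices made for illustration; they are not part of CVSS or of any product's scoring model.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    name: str
    cvss: float               # CVSS base score, 0.0-10.0
    internet_facing: bool
    public_exploit: bool
    asset_criticality: float  # hypothetical business weight, 0.0-1.0


def contextual_score(f: Finding) -> float:
    """Scale the CVSS base score by illustrative context multipliers."""
    exposure = 1.0 if f.internet_facing else 0.4   # assumed weights
    exploit = 1.0 if f.public_exploit else 0.6     # assumed weights
    # Criticality moves the result between 50% and 100% of the exposed score.
    business = 0.5 + 0.5 * f.asset_criticality
    return f.cvss * exposure * exploit * business


backup = Finding("internal backup server", 9.1, False, False, 0.5)
webapp = Finding("public web application", 6.5, True, True, 0.9)

# Sorting by CVSS alone puts the backup server first;
# sorting by the contextual score puts the web application first.
by_cvss = sorted([backup, webapp], key=lambda f: f.cvss, reverse=True)
by_context = sorted([backup, webapp], key=contextual_score, reverse=True)
```

The weights here are placeholders. In practice they would need to be derived from an organization's own risk tolerance and validated against real incident data.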

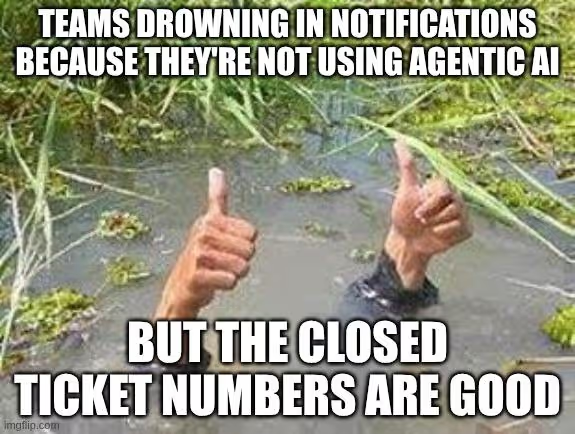

Alert fatigue blinds overworked teams

This context-blindness contributes to alert fatigue, one of the most pressing operational problems facing security teams today. When every scan returns an overwhelming stream of high-severity findings, teams quickly become desensitized. Important vulnerabilities may get buried in the noise, especially when analysts are forced to work through extensive backlogs without sufficient automation or context to guide them. The result is both burnout and blind spots: an exhausted team making risk decisions under pressure, with incomplete information.

Detection ≠ exposure insight

At the core of this problem is what we might call the “exposure gap.” Traditional scanners are very good at identifying known vulnerabilities, but they fall short of showing whether those vulnerabilities are truly exploitable. Attack surface management and exploit intelligence rarely feed into the same workflow as vulnerability detection, meaning the “scan result” becomes the de facto source of truth - even if it ignores how an attacker might move laterally, chain exploits, or bypass layers of defense. Simply knowing a vulnerability exists is not enough; exposure insight requires understanding the context in which that vulnerability exists.
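One way to picture the difference between a scan result and exposure insight is a reachability check over a network graph. The sketch below is an assumption-laden toy: the host names and the `REACHABLE` edge map are invented, and real attack-path analysis would also need credentials, firewall rules, and exploit preconditions.

```python
from collections import deque

# Hypothetical connectivity map: host -> hosts it can open connections to.
REACHABLE = {
    "internet": ["web-01"],
    "web-01": ["app-01"],
    "app-01": ["db-01"],
    "backup-01": [],
}


def hops_from(start: str, graph: dict[str, list[str]]) -> dict[str, int]:
    """Breadth-first search returning the hop count to every reachable host."""
    hops = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, []):
            if nxt not in hops:
                hops[nxt] = hops[node] + 1
                queue.append(nxt)
    return hops


# Both hosts appear in the scan output, but only one sits on a path
# that starts at the internet.
vulnerable_hosts = ["db-01", "backup-01"]
hops = hops_from("internet", REACHABLE)
exposed = [h for h in vulnerable_hosts if h in hops]
```

Here `db-01` is three hops from the internet through lateral movement, while `backup-01` is not reachable at all in this toy topology, even though a scanner would list both.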

Applying the NIST AI RMF to your vulnerability exposure management program

A successful agentic AI deployment begins with governance. The GOVERN function of the NIST AI RMF calls for clear policy definition around how AI systems operate within an organization. This includes defining risk tolerances (such as when AI is permitted to auto-prioritize or initiate a patch) and the boundaries beyond which human review is required. Teams have to determine which environments (e.g., dev, staging, production) are eligible and safe for autonomous action, and which should require escalation or approval. Without governance, agentic AI risks becoming an unpredictable actor; with governance, it becomes a valuable extension of the security team.
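A governance policy of this kind can be written down as data that the agent consults before acting. The following is a minimal sketch; the `POLICY` table, the `Action` levels, and the specific environment-to-action assignments are assumptions for illustration, not requirements of the NIST AI RMF.

```python
from enum import Enum


class Action(Enum):
    AUTO = "auto"          # agent may act without review
    APPROVAL = "approval"  # agent proposes, a human approves
    ESCALATE = "escalate"  # agent hands off to a human owner


# Hypothetical policy table: (environment, operation) -> required handling.
POLICY = {
    ("dev", "prioritize"): Action.AUTO,
    ("dev", "patch"): Action.AUTO,
    ("staging", "prioritize"): Action.AUTO,
    ("staging", "patch"): Action.APPROVAL,
    ("production", "prioritize"): Action.AUTO,
    ("production", "patch"): Action.ESCALATE,
}


def required_handling(environment: str, operation: str) -> Action:
    """Look up the handling for an operation; unknown cases escalate."""
    # Anything not explicitly listed falls back to the most restrictive option.
    return POLICY.get((environment, operation), Action.ESCALATE)
```

Defaulting to escalation for anything not listed is a deliberate choice in this sketch: a new environment or operation gets human review until someone explicitly grants the agent more autonomy.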

The next pillar, MAP, involves documenting the AI agent’s operating inputs and outputs. The first step is to inventory the data sources that supply the AI, such as vulnerability scanners, threat intelligence feeds, asset inventories, and configuration databases. The second step is to record the destinations where the AI sends its results, for example Jira tickets, ServiceNow tasks, dashboards, or direct patch orchestration pipelines. Maintaining this visibility ensures that every decision can be traced and any unexpected behavior can be investigated. If the AI deprioritizes a critical vulnerability, teams can identify which data source influenced that judgment and reconstruct the reasoning path that produced it.
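The inventory described above can be kept as a small, explicit data structure, with each decision recording which sources contributed to it. This is a sketch under assumed names: `AgentMap`, `Decision`, the source labels, and the finding identifier are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class AgentMap:
    sources: tuple[str, ...]       # where the agent reads from
    destinations: tuple[str, ...]  # where the agent writes to


@dataclass
class Decision:
    finding_id: str
    priority: str
    # Maps each contributing source to the signal it supplied.
    inputs_used: dict[str, str] = field(default_factory=dict)


AGENT_MAP = AgentMap(
    sources=("vuln_scanner", "threat_intel", "asset_inventory", "cmdb"),
    destinations=("jira", "servicenow", "dashboard", "patch_pipeline"),
)


def untraceable_inputs(decision: Decision, agent_map: AgentMap) -> list[str]:
    """Return inputs that came from sources missing from the inventory."""
    return sorted(s for s in decision.inputs_used if s not in agent_map.sources)


decision = Decision(
    finding_id="FINDING-001",
    priority="low",
    inputs_used={
        "vuln_scanner": "CVSS 9.1",
        "asset_inventory": "internal only",
        "chat_export": "owner says decommissioned",
    },
)
```

In this example `untraceable_inputs(decision, AGENT_MAP)` reports `chat_export`, which points an investigator at the undocumented source that influenced the deprioritization.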

Once governance and mapping are in place, teams must turn to MEASURE. Agentic AI in VEM should not be treated as a fire-and-forget tool. Its performance must be assessed through targeted metrics, such as:

- Prioritization accuracy: Are the vulnerabilities flagged by AI the ones later targeted by threat actors?

- Reduction in MTTR: Is the mean time to remediate improving for the issues identified as critical?

- Scenario testing results: Can the AI correctly prioritize simulated, multi-stage attack paths?

These metrics help teams refine AI behavior over time and adapt it to new threat patterns or organizational changes.
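The first two metrics are simple enough to compute directly. The sketch below assumes prioritization accuracy is measured as precision (the share of flagged findings later targeted) and that MTTR is averaged over detection and remediation timestamps; the identifiers and dates are made-up sample data.

```python
from datetime import datetime
from statistics import mean


def prioritization_precision(flagged: set[str], exploited: set[str]) -> float:
    """Share of AI-flagged findings that were later targeted."""
    return len(flagged & exploited) / len(flagged) if flagged else 0.0


def mttr_days(records: list[tuple[datetime, datetime]]) -> float:
    """Mean time to remediate, in days, over (detected, remediated) pairs."""
    return mean((fixed - found).total_seconds() / 86400 for found, fixed in records)


# Made-up sample data for illustration only.
flagged = {"F-1", "F-2", "F-3", "F-4"}
exploited = {"F-2", "F-4", "F-9"}
records = [
    (datetime(2025, 1, 1), datetime(2025, 1, 4)),  # remediated after 3 days
    (datetime(2025, 1, 2), datetime(2025, 1, 7)),  # remediated after 5 days
]

precision = prioritization_precision(flagged, exploited)  # 2 of 4 flagged were targeted
mttr = mttr_days(records)                                 # average of 3 and 5 days
```

Precision alone can hide missed exposures (such as `F-9` above, which was exploited but never flagged), so a team would likely want to track recall alongside it.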

Finally, the MANAGE function emphasizes the need to keep humans in the loop, particularly for high-risk exposures. AI can propose a prioritized top 10 list each week, but it should not act on that list unilaterally. Human analysts should provide final review, especially for exposures involving production assets or sensitive data. Moreover, all AI-generated decisions should include justifications, e.g. “CVSS 7.2 + public exploit + internet-facing asset,” and those decisions must be logged. This ensures auditability, accountability, and trust.
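A decision record with a built-in justification and a review flag might look like the following sketch. The field names, the `build_decision_record` helper, and the rule that every production finding waits for an analyst are assumptions made for illustration.

```python
import json
from datetime import datetime, timezone


def build_decision_record(finding_id, cvss, public_exploit, internet_facing,
                          environment):
    """Assemble an auditable record with a human-readable justification."""
    reasons = [f"CVSS {cvss}"]
    if public_exploit:
        reasons.append("public exploit")
    if internet_facing:
        reasons.append("internet-facing asset")
    return {
        "finding_id": finding_id,
        "justification": " + ".join(reasons),
        # Assumption: production findings always wait for an analyst.
        "needs_human_review": environment == "production",
        "logged_at": datetime.now(timezone.utc).isoformat(),
    }


record = build_decision_record("FINDING-042", 7.2, True, True, "production")
# One JSON object per line is easy to append to an audit log and to parse later.
log_line = json.dumps(record, sort_keys=True)
```

Keeping the justification as a plain string that an analyst can read at a glance, alongside structured fields that tooling can query, serves both the human reviewer and the audit trail.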

Operationalizing agentic AI‑driven exposure management

Unlike CVSS-based systems that apply generic severity scores, vRx by Vicarius prioritizes vulnerabilities using a context-aware AI engine. It factors in exploitability, asset importance, network exposure, and business role to generate dynamic risk scores that reflect actual exposure as opposed to mere theoretical severity. This directly supports better-informed decisions and aligns with the MAP and MEASURE stages of the NIST AI RMF. vRx lays the groundwork for agentic AI through continuous discovery of assets across hybrid environments. By maintaining a live inventory of servers, endpoints, OS versions, and installed applications, it ensures that AI agents are able to operate with real-time context instead of outdated or partial data. This reduces false positives and prevents oversight of critical exposures.

Remediation in vRx is adaptive, offering three flexible paths: automated native patching for common OS environments, a scripting engine for configuration or logic-based fixes, and patchless protection that contains vulnerabilities when traditional patching isn’t viable.

Through REST APIs, vRx integrates seamlessly with SIEM, ITSM, and automation platforms. This allows AI-driven insights to trigger ticketing, update dashboards, and initiate responses without introducing manual overhead - making scaled remediation both fast and consistent.

For governance and reporting, vRx includes vAnalyzer: a BI-style dashboard and reporting suite that tracks exposure over time, shows risk reduction trends, and maps activity to compliance benchmarks like NIST CSF and ISO 27001. This bridges the communication gap between technical teams and risk owners.

Organizations using vRx report meaningful operational gains, including reduced MTTR, fewer tools to manage, and a lighter load on analysts. These outcomes show that agentic AI isn’t just conceptually sound – it’s practically implementable today.

From scanner output to strategic intelligence

Agentic AI, when deployed with the right guardrails, represents a leap forward in how we manage vulnerability exposure. Instead of drowning in noisy scan data, security teams gain a filtered, prioritized view of what actually matters - contextualized by business risk and attack surface visibility. By automating the repetitive and scaling the contextual, agentic AI repositions the security professional from patch jockey to strategic advisor. Teams can focus on securing the most critical assets, advising business stakeholders, and preparing for emerging threats instead of constantly playing catch-up.

The time to act is now. Waiting for regulatory clarity or industry consensus means falling behind. As threat volumes rise and compliance frameworks evolve, organizations that invest in structured, responsible AI adoption will be best positioned to respond. With frameworks like the NIST AI RMF and platforms like vRx already in place, the path to intelligent exposure management is clear and within reach.

Request a demo today to see how vRx can help your teams work smarter in no time.