Katie: Every organization, I mean, you see their security dashboards, right? They're just lighting up like Christmas trees. Alerts for everything: CVEs, misconfigurations, outdated libraries. But here's the thing, and this is where it gets really interesting for security teams. Finding those vulnerabilities, that's actually kind of the easy part now. We've got amazing scanning tools for that. Fixing them, that's where security gets real. That's where it moves from just knowing stuff to actually doing something. So today, we're taking a deep dive into vulnerability remediation. It's that absolutely crucial stage where our security shifts from just seeing a problem to actively solving it.

James: Exactly. And you hit on the critical point there. Attackers, they don't wait. They don't care about your internal patch cycles or committee approvals or waiting for that monthly change window. They just exploit. So unless you effectively close that loop, the one between seeing the alert and fixing the issue, those alerts, they just stay alerts. They're not solutions. They don't actually reduce your risk. It's the difference between knowing your house has a broken window and actually boarding it up before the storm hits.

Katie: Right, so our mission today is really to equip you, our listener, with a crystal clear understanding of what vulnerability remediation truly is, why it's so notoriously challenging in practice, and also the best practices, especially things like automation, that modern teams, the really high performing ones, are using to make it work at scale. Think of this deep dive as maybe your shortcut, a way to understand how organizations move from, okay, we know there's a problem, to we've actually fixed it and really, truly reduced our risk. We're going to unpack this whole process step-by-step, shine a light on the critical decisions, the actions involved, so you can walk away feeling well-informed, ready to connect the dots in your own world. All right, let's unpack this then. We hear vulnerability remediation, and for a lot of people, maybe it just sounds like fixing stuff, but what does that really mean for an organization, beyond just that simple phrase?

James: Yeah, you're right. It's definitely more nuanced than just fixing stuff. At its core, vulnerability remediation is the systematic process of identifying, then prioritizing, and then actively mitigating or eliminating security weaknesses in systems, software, configurations. The key is doing it before they can be exploited by an attacker. And crucially, like you said, it's not just about applying a patch. It's really about making targeted, risk-aware decisions to reduce that exposure. Imagine like a doctor diagnosing an illness. They don't just say you're sick. They figure out exactly what it is, how bad it is, consider your overall health, and then prescribe a precise treatment plan. That's remediation.

Katie: Okay, so it's about being strategic, thinking ahead, understanding the whole picture before you jump in. But, you know, in an environment with thousands of alerts pouring in constantly, isn't there a risk that strategic just becomes another word for slow? Especially when the attackers are moving so fast.

James: That's a really perceptive challenge. And yeah, it absolutely highlights why remediation is so complex. You definitely need speed, but just running fast without knowing where you're going, that can break critical systems. This whole process, it lives inside a bigger thing we call the vulnerability management lifecycle. It starts with discovery, then assessment, prioritization, then remediation, followed by validation, and finally, reporting. Remediation is, without a doubt, the central pillar. If you don't have effective remediation, the whole cycle just breaks down. All that effort you put into discovery and assessment, it's kind of wasted if attackers can still exploit that gap between knowing and fixing. It's the point where knowledge either turns into actual security or it doesn't.

Katie: That makes perfect sense. It's the action step. So if it's this multi-step journey, the central pillar, what are those critical stages you need to navigate? How do you get from just an alert about a potential problem to a confirmed, verified solution?

James: Well, what's fascinating here is that the journey, it sounds logical on paper, right? But each step needs precision. It needs collaboration. It starts with step one, identification. This is all about visibility, getting eyes across your whole environment. Think about it. Security scanners are working constantly, detecting everything from outdated OS, maybe a misconfigured cloud permission, some unsupported software still hiding somewhere, or even those tricky supply chain exposures buried deep in your software. These findings, they often get mapped to known CVEs, those common vulnerabilities and exposures, or maybe they come from specific vendor advisories. The key takeaway from this first step is understanding what's vulnerable, where it is in your systems, and how it might matter based on its context. But, and this is important, this step doesn't yet tell you what to do about it. It's just finding the problems.

Katie: Right. And you mentioned earlier that finding vulnerabilities is almost the easy part now, but if you're an organization looking at, I don't know, 5,000 assets and maybe 30,000 vulnerabilities, how do you even begin to decide what to tackle first? That sounds absolutely monumental, which brings us squarely to step two, prioritization, I guess.

James: Absolutely, and you nailed it. This is where most teams get stuck. Prioritization is where you have to move beyond just raw numbers and technical scores. You absolutely cannot just fix based on the highest CVSS score alone. That's the Common Vulnerability Scoring System, right? It gives a technical severity, but it's static. It doesn't tell the whole risk story. Instead, you have to adopt a risk-based approach. That means layering on dynamic context. You gotta ask, is there a known active exploit for this vulnerability right now, out in the wild? Things like CISA's KEV catalog, their Known Exploited Vulnerabilities list, it's invaluable here. Then, is the system itself business critical? You know, core operations depend on it. What about interdependencies? A flaw in a minor service could cripple a whole application. Is it internet facing, easily reachable by attackers? And are there already other controls in place, maybe a firewall rule or an IPS, that might reduce the immediate risk? To give you a concrete example, take a vulnerability with a, let's say, lower CVSS score, maybe a six, but there's an active ransomware exploit for it being used by criminals today. That's way more urgent than a theoretical 9.8 CVE buried on some isolated test box nobody can reach. That real-world context, often from threat intelligence, that's what turns a technical score into a real risk assessment. The goal, really, is simple. Fix what matters most first. Maximize your risk reduction with the resources you actually have.
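
As a rough illustration of the risk-based approach James describes, here is a minimal Python sketch. The field names, the weights, and the two sample findings are assumptions invented for this example. They are not from the episode or from any particular tool, and a real program would tune them to its own environment.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    vuln_id: str                # placeholder label, not a real CVE ID
    cvss: float                 # static technical severity, 0.0 to 10.0
    actively_exploited: bool    # e.g. appears on a known-exploited list
    business_critical: bool
    internet_facing: bool
    compensating_control: bool  # firewall rule, IPS signature, etc.


def risk_score(f: Finding) -> float:
    """Blend CVSS with context. All weights are illustrative assumptions."""
    score = f.cvss
    if f.actively_exploited:
        score += 5.0
    if f.business_critical:
        score += 2.0
    if f.internet_facing:
        score += 2.0
    if f.compensating_control:
        score -= 1.5
    return score


findings = [
    # High CVSS, but on an isolated test box nobody can reach.
    Finding("EXAMPLE-A", 9.8, False, False, False, False),
    # Lower CVSS, but actively exploited on a critical, exposed system.
    Finding("EXAMPLE-B", 6.0, True, True, True, False),
]

# Highest contextual risk first.
ranked = sorted(findings, key=risk_score, reverse=True)
for f in ranked:
    print(f.vuln_id, risk_score(f))
```

With these assumed weights, the actively exploited finding with the lower CVSS score ranks above the isolated 9.8, which is the ordering the example in the conversation argues for.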

Katie: OK, that makes a lot more sense. Focus on the real danger. So once those critical priorities are set, how do teams actually translate that knowledge into action? It's not just knowing what needs fixing. It's the how, right? Which leads us to step three, fix or mitigate. What kind of fixes are we talking about? It's not always just one magic button, is it?

James: No, rarely just one button. And yeah, that's part of the complexity. Remediation involves a whole spectrum of actions. Most commonly, sure, it's applying vendor patches, OS patches, browser updates, fixes for applications like databases or web servers, but it can also be changing configurations, maybe disabling weak ciphers on a server, restricting network access, tightening up password policies. In some cases, like with zero days where there's no official patch yet, or for those old legacy systems you just can't update, teams might deploy virtual patches. That means using external controls like a web application firewall rule or an IPS signature to block the exploit attempt without actually touching the vulnerable code itself. Sometimes the answer is replacing or upgrading unsupported software altogether. Get rid of it. Or if you really can't fix it right away, maybe you resort to segmentation or isolation, basically walling off the vulnerable system to limit its blast radius, you know. And this brings up a crucial distinction, mitigation versus remediation. Mitigation is like putting a temporary barrier around a dangerous hole. It reduces risk until you can properly fix it. Remediation is actually filling in the hole. And it's also important to say, sometimes accepting a specific, very low risk vulnerability might be a conscious business decision. But that needs to be an active, documented choice, not just, oh, well, it's in the backlog.

Katie: Gotcha. So apply the patch, change the config, maybe isolate it, or even accept the risk consciously. Okay, many organizations might breathe a sigh of relief then. The fix is applied, the mitigation is in place. But is that really the end of the story? What's the critical step that maybe gets overlooked? What happens if you do just check that box and move on?

James: Not quite. And that brings us to step four, validation and documentation. This step gets skipped or rushed way too often, and it creates this massive blind spot. Verifying that the fix actually worked as intended, that's just as important as applying it in the first place. Validation means things like rescanning the asset. Confirm the vulnerability is truly gone, not just marked as patched or resolved. Did the fix introduce any new problems? You need to check that too. Then comes documentation. Logging the fix in your ticketing system, detailing the timelines, who did what, what actions were taken, any approvals. And you also measure key things like mean time to remediate, your MTTR. This isn't just for the auditors, though it helps there too. It's about building a system you can trust, understanding your own efficiency, having a clear record if, heaven forbid, there's an incident later. Without validation, you're basically operating on a guess. And without that documentation, you can't learn, you can't improve, and you can't prove anything.
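
The rescan check described here can be sketched as a comparison of two scan results for the same asset. This is only an illustration: the finding labels are placeholders, and the idea that a scan reduces to a set of identifiers is a simplifying assumption.

```python
# Hypothetical scan results for one asset, before and after the fix.
scan_before = {"EXAMPLE-1", "EXAMPLE-2"}
scan_after = {"EXAMPLE-2", "EXAMPLE-3"}

# Findings the fix actually removed.
resolved = sorted(scan_before - scan_after)
# Findings still present, even if a ticket says they were patched.
still_open = sorted(scan_before & scan_after)
# Findings that appeared only after the change.
newly_introduced = sorted(scan_after - scan_before)

print("resolved:", resolved)
print("still open:", still_open)
print("newly introduced:", newly_introduced)
```

Anything left in `still_open` or `newly_introduced` would be a reason to reopen the ticket instead of closing it.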

Katie: That whole process laid out like that, it sounds so logical, almost like a, I don't know, a well-oiled machine on paper. But our source material really hammers home that in reality, it's incredibly difficult to implement effectively. So what are the big obstacles teams run into? What's the reality check here?

James: Yeah, this is exactly where theory hits the road, and those friction points become, well, painfully clear. There are about six common challenges that consistently trip up organizations, and they often make each other worse. First, you've got patch overload, just sheer volume. We're talking thousands, often tens of thousands of vulnerabilities across a typical enterprise. Critical becomes this constantly moving target, and security teams just don't have the bandwidth, the people power to handle it all manually. It leads straight to alert fatigue. You know, everything seems urgent. So effectively, nothing is.

Katie: I can totally see that. Just drowning in alerts.

James: Exactly. Second, you run smack into change management bottlenecks. Applying fixes, especially on production systems, that needs approvals, testing, often downtime. In many places, patching a critical server involves more bureaucracy and takes longer than deploying brand new features. Change windows are infrequent, tightly scheduled, and you always need complex rollback plans. It just adds tons of friction and delay to every single fix.

Katie: Wow, yeah, patching is harder than new stuff. That's counterintuitive.

James: It often is. Third, a really pervasive problem is fragmented tooling. Imagine trying to build a house with 10 different kinds of hammers, and none of them are quite right. Teams use one tool for scanning, another for patching, maybe scripts for some things, a separate ticketing system, different asset management. It creates data silos. You end up doing manual gymnastics just to get information from one system to another. It slows everything down to the pace of the slowest manual step. It just doesn't scale at all.

Katie: Yeah, it sounds like everyone's running a different race. Even if they're supposed to be on the same team, that friction must be immense.

James: Immense is a good word for it. Then number four, there's the big issue of legacy and unpatchable systems. So many companies still rely on old hardware or software that's end of life, the vendor doesn't support it anymore, or you simply can't update it without breaking some critical business process that depends on it. So teams are stuck. Do they try to isolate these systems, deploy those virtual patches we talked about, or just accept the risk, which nobody really wants to do?

Katie: That's a tough spot.

James: Definitely. Fifth, a really common organizational hurdle. Lack of clear ownership. A vulnerability pops up. Who owns the fix? Is it IT's job? Security's job? What about flaws in third party apps managed by vendors? This ambiguity, it leads to finger pointing, delays. Vulnerabilities just fall through the cracks. It's that classic hot potato game, but with serious security risks.

Katie: Nobody wants to catch it.

James: Right. And finally, number six, there's huge compliance pressure. Security teams are always striving to meet external patching deadlines. Think HIPAA, PCI DSS, ISO standards. But the business units, they often resist the downtime or the resources needed for these fixes. "We can't take that system down now." So it's this constant, difficult balancing act. Security mandates versus keeping the business running smoothly. It's a tension you see everywhere.

Katie: Wow, it really paints a vivid picture, doesn't it? A complex balancing act, like you said. So many moving parts, and any one of them can jam up the works, stall progress, and leave organizations exposed. So given all these really significant challenges, how do successful organizations actually manage to make remediation work? Effectively, I mean. What are the best practices that actually cut through the noise?

James: It's true. The challenges are definitely substantial, but successful remediation programs, they are possible, and they tend to share some common traits. They're consistent, they're transparent, and they are ruthlessly prioritized. They don't try to boil the ocean, you know, they focus on key best practices. The absolute first one is to truly embrace risk-based prioritization. This is that fundamental shift away from patch everything, which is just unsustainable, to patch what matters most, continuously evaluating vulnerabilities based on, yes, exploitability, but also the business value of the asset and its exposure. Are attackers actively targeting this? How bad would it be if this got hit? That ensures you're putting your limited resources where they have the biggest impact on reducing real risk.

Katie: OK, so focus, focus, focus on the real risk.

James: Precisely. Second, you absolutely must define clear roles and ownership. Security teams, IT teams, application owners. Everyone needs clear service level agreements, or SLAs. Explicit owners identified for remediation by system, by application, maybe by business unit. Everyone needs to know exactly who is responsible for fixing what and by when. No more ambiguity, no more hot potato.

Katie: Right. Clarity and accountability. Makes sense.

James: Third, successful teams align with IT and change windows. Security can't just operate in a vacuum, throwing demands over the wall. Remediation plans have to be integrated into existing IT operations, scheduled maintenance windows. This builds collaboration instead of conflict. It ensures fixes can actually get deployed without causing chaos.

Katie: So it's about partnership, not just enforcement. That sounds like a much stronger operational backbone.

James: Absolutely. Then you always, always test, roll out, and roll back. Don't just push patches out blindly and hope for the best. Use phased deployments. Start small. Test things thoroughly in staging or test environments first. And crucially, always have a documented, tested rollback plan in case something goes sideways. This builds confidence, it reduces that fear of breaking production, which is honestly a huge reason things get delayed.

Katie: Yeah, nobody wants to be the one who took down the payroll system with a patch.

James: Exactly. Fifth, and this is where we start talking about really speeding things up, automate where you can. Free up your smart, skilled people from doing the same repetitive, low-value tasks over and over. If a task is repeatable, predictable, scriptable, it's probably a good candidate for automation.

Katie: Okay, let the machines do the grunt work.

James: Pretty much. And finally, track and report relentlessly. Monitor your mean time to remediate, MTTR. Track the percentage of your critical vulnerabilities you're resolving within your defined SLAs. Look for patterns. Identify recurring CVEs or systemic bottlenecks in certain teams or systems. What gets measured gets managed, right? This data is absolutely essential for seeing where you're improving, where you're stuck, and for demonstrating the actual value of your security efforts.

Katie: You mentioned automation quite a bit there and, I mean, given all those challenges we just talked about, the sheer volume, the complexity, how essential is automation today? Is it really a game changer or is it still more of a, you know, nice to have luxury for big companies?

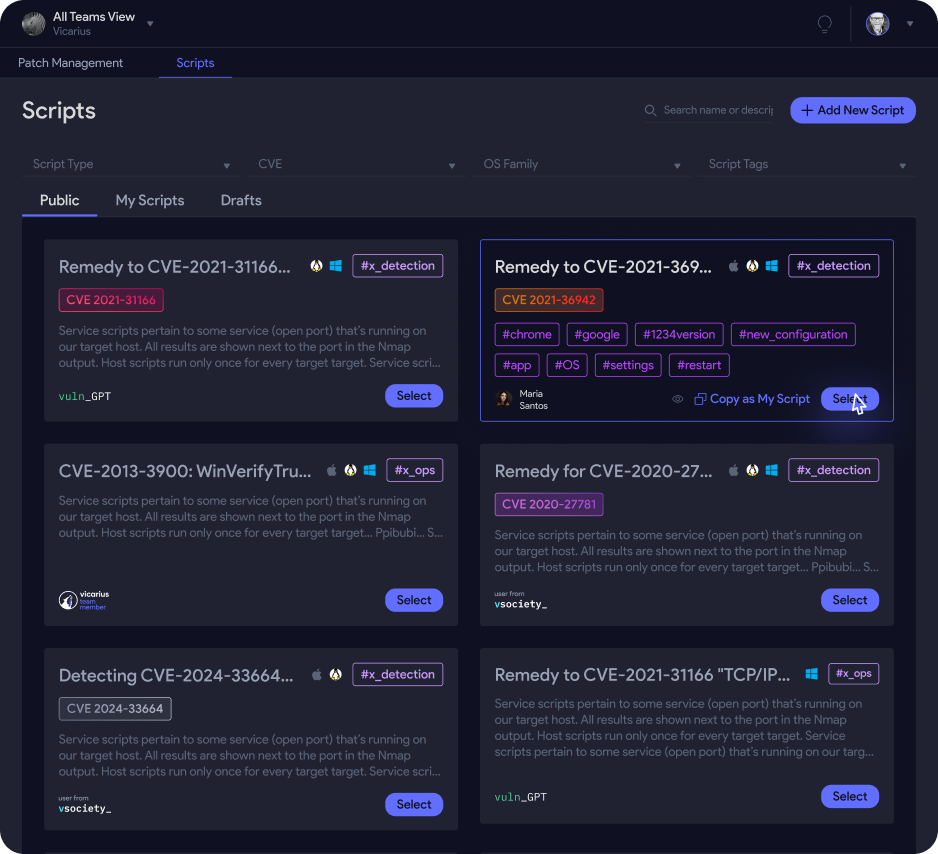

James: Oh, automation is absolutely no longer a nice to have. It's an absolute necessity. Manual remediation, it just cannot keep pace. Not with today's threat velocity, not with the sheer volume of vulnerabilities being discovered every single day. The security landscape changes hourly, not monthly. Human teams doing this manually are constantly playing catch-up, which leads to burnout, and the backlog just keeps growing. This is precisely where platforms like Vicarius vRx, for example, are really making automated, intelligent remediation a reality. They tackle the problem at scale. They can patch across all sorts of different operating systems, thousands of third-party applications, essentially automating what used to be incredibly manual, incredibly time-consuming work. They can also run script-based fixes for common misconfigurations, fixing whole classes of vulnerabilities with precision, automatically.

Katie: That sounds really powerful for just reducing that manual burden, that toil. But what about those tricky situations you mentioned earlier, those legacy systems, or zero days where maybe there isn't even a traditional patch available?

James: And that's where the truly advanced automation platforms really shine. They can apply what's called patchless protection when there isn't a conventional vendor patch. This means using techniques like memory-level shielding, actively defending against exploit attempts right inside the application's memory space. It's like vaccinating the application against specific attacks, even without needing a traditional patch or even a system reboot sometimes. It's a layer of defense that works even when a normal fix isn't an option. And beyond just the individual fixes, these systems automate policy-based remediation. You set the rules based on factors like, is it actively exploited? What's its risk score? What kind of asset is it? Compliance requirements. And then the system carries out the actions based on your policy. And every single action taken is meticulously tracked. You get a full audit trail for compliance, a clear record for analysis.
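
To make the idea of policy-based remediation with an audit trail concrete, here is a small Python sketch. It is not how any named platform works. The rule order, thresholds, action names, and asset names are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class Finding:
    asset: str
    asset_type: str           # e.g. "server" or "workstation"
    risk_score: float         # assumed 0.0 to 10.0 scale
    actively_exploited: bool
    patch_available: bool


def decide_action(f: Finding) -> str:
    """Return the action a hypothetical policy would choose for a finding."""
    if not f.patch_available:
        # No vendor fix yet: fall back to a compensating, patchless control.
        return "apply_patchless_protection"
    if f.actively_exploited or f.risk_score >= 9.0:
        return "patch_immediately"
    if f.asset_type == "server":
        return "patch_in_next_change_window"
    return "patch_on_regular_schedule"


audit_trail: list[dict] = []


def remediate(f: Finding) -> str:
    """Pick an action by policy and record it so every decision is traceable."""
    action = decide_action(f)
    audit_trail.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "asset": f.asset,
        "action": action,
    })
    return action


print(remediate(Finding("legacy-01", "server", 7.0, True, False)))
print(remediate(Finding("web-01", "server", 9.5, False, True)))
print(remediate(Finding("laptop-07", "workstation", 4.0, False, True)))
print(len(audit_trail), "actions logged")
```

Keeping the decision logic in one function and the logging in another means the policy can be reviewed and tested on its own, while every action still lands in the same record.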

Katie: So it's like setting up intelligent guardrails, defining the rules of engagement, and then letting the system take targeted, immediate action. It eliminates delays, reduces human error, and even provides protection where maybe no traditional patch exists. That really sounds like a strategic shift, empowering security teams to be proactive, not just reactive firefighters.

James: Exactly. You know, analysts like Gartner, they call this the future of security operations. But honestly, we think it's rapidly becoming the new normal. It's about turning remediation from this constant reactive stressful exercise into a proactive automated security control. It lets organizations finally get ahead of the attackers. It transforms security from just an abstract concept on a dashboard into tangible, measurable protection in the real world.

Katie: Okay, so for organizations that are adopting these more sophisticated practices, these technologies, how do they really know they're actually improving? How do they measure success? What are the key metrics that truly matter in this critical area?

James: That's a great question. Tracking the right metrics is absolutely crucial, for visibility, for accountability, and frankly, for demonstrating value back to the business. First, the mean time to remediate, MTTR. That's paramount. It tells you, on average, how long it takes your organization to fix a vulnerability once it's discovered. A decreasing MTTR, that's a huge signal of improvement in your efficiency and your overall posture.

Katie: Okay, speed matters.

James: Speed definitely matters. Then there's the percentage of critical vulnerabilities resolved within SLA. This tracks how well you're sticking to your own promises, your service level agreements, for fixing the high priority stuff on time. Are you tackling the biggest risks effectively? You also want to monitor things like the top 10 recurring CVEs by asset group. This isn't just about individual fixes. It highlights systemic problems, maybe persistent misconfigurations, maybe poor software choices that keep introducing the same risks over and over. It helps you find and fix the root causes, not just whack the moles.
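
The first two metrics, MTTR and the share of critical vulnerabilities fixed within SLA, reduce to simple arithmetic over ticket data. A minimal Python sketch follows. The ticket records and the seven-day SLA are made-up values for illustration.

```python
from datetime import date

# Hypothetical ticket records: (severity, date discovered, date fixed).
tickets = [
    ("critical", date(2024, 1, 1), date(2024, 1, 4)),
    ("critical", date(2024, 1, 2), date(2024, 1, 12)),
    ("high", date(2024, 1, 3), date(2024, 1, 8)),
]

CRITICAL_SLA_DAYS = 7  # assumed SLA for critical findings

# Mean time to remediate: average days from discovery to fix.
days_to_fix = [(fixed - found).days for _, found, fixed in tickets]
mttr_days = sum(days_to_fix) / len(days_to_fix)

# Percentage of critical findings fixed within the SLA.
critical_days = [
    (fixed - found).days for sev, found, fixed in tickets if sev == "critical"
]
within_sla = sum(1 for d in critical_days if d <= CRITICAL_SLA_DAYS)
pct_within_sla = 100 * within_sla / len(critical_days)

print(f"MTTR: {mttr_days:.1f} days")
print(f"Critical within SLA: {pct_within_sla:.0f}%")
```

With this sample data the fixes took 3, 10, and 5 days, so MTTR is 6.0 days, and one of the two critical findings met the assumed SLA, giving 50%.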

Katie: Ah, looking for those patterns. Smart.

James: And perhaps the most telling metric, especially for proactive security, is the time to patch versus time to exploit. We often call it the TTP-TTE gap. This metric compares your organization's average time to patch a specific vulnerability against the average time it takes attackers to weaponize and start exploiting that same vulnerability out in the wild. It's really the ultimate measure. Are you winning the race against the attackers? If your time to patch, TTP, is consistently shorter than their time to exploit, TTE, you're proactively shutting down attack vectors before they can be used against you. That's really the gold standard for vulnerability remediation.
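
The TTP-TTE comparison can be sketched the same way. The numbers below are invented, and measuring both times in days since public disclosure is an assumption made for this example.

```python
# Hypothetical timings, in days since public disclosure.
timings = {
    "vuln-a": {"time_to_patch": 5, "time_to_exploit": 14},
    "vuln-b": {"time_to_patch": 30, "time_to_exploit": 7},
}

# Positive gap: patched before exploitation began. Negative: exposed.
gaps = {
    name: t["time_to_exploit"] - t["time_to_patch"]
    for name, t in timings.items()
}
exposed = [name for name, gap in gaps.items() if gap < 0]

avg_ttp = sum(t["time_to_patch"] for t in timings.values()) / len(timings)
avg_tte = sum(t["time_to_exploit"] for t in timings.values()) / len(timings)

print("gaps:", gaps)
print("patched too late:", exposed)
print("winning on average:", avg_ttp < avg_tte)
```

Looking at the per-vulnerability gaps alongside the averages shows which specific fixes lost the race, which an average alone would hide.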

Katie: Wow, those numbers, they really do tell the story, don't they? Where you're improving, where maybe you're still getting stuck. It allows for that continuous refinement, that clear understanding of how effective your security really is. We've covered a lot of ground today. From the basic definition of vulnerability remediation through that intricate dance of prioritization, the, let's be honest, often frustrating real-world challenges, and then the really game-changing power of automation. What's the main takeaway you hope our listener walks away with from this deep dive?

James: You know, it's so easy to get lost in the dashboards, the endless detections, the sheer volume of alerts. It can feel overwhelming. But ultimately, security isn't just about knowing what's vulnerable. That's important, but it's not enough. It's really about doing something about it. Vulnerability remediation is where that action happens. It's how organizations translate all those security insights into actual, tangible risk reduction. It's how you meet compliance obligations with confidence. And crucially, it's how you win back incredibly valuable time for your security teams, who are often stretched way too thin. Remediation, effective remediation, is the true measure of a robust working security program.

Katie: Yeah, well said. The source material leaves us with this really powerful directive. Don't just scan. Don't just triage. Remediate with intent, with speed and with confidence. And, you know, I think that applies to so many areas beyond just cybersecurity, right? Where in your own life or your own work, are you maybe getting stuck in that identifying problems phase, but not quite making it to remediating them with purpose, with speed? What's maybe one small step you can take towards action this week? We really hope this deep dive has given you some valuable insights to think about.