AI is being deployed faster than it’s being secured. Generative AI has exploded into production (95% of US companies now use it), yet security and privacy have quickly become top concerns slowing adoption. In my experience as an R&D leader at a cybersecurity firm working with Fortune 1000 CISOs, many organizations can’t even fully define what their “AI infrastructure” includes. That’s a problem. I’ve seen a dangerous trend: GenAI apps are being built on fragile, fragmented, and often insecure foundations. We’re rushing to implement chatbots and AI services while leaving fundamental gaps in our defenses. I can tell you bluntly: if you’re deploying AI without securing its pipeline, you’re inviting your next breach.

What Is AI Infrastructure?

When we say AI infrastructure, what do we mean? It’s more than just the AI model. It’s a full stack of components that together enable AI capabilities, and each layer is its own attack surface:

- Foundation models & compute – The pretrained model (e.g., LLMs) and the compute stack (GPUs, servers) used for training, fine-tuning, and deployment.

- GenAI apps & interfaces – User-facing layers like chatbots, plugins, or web/mobile apps that deliver AI and handle prompt orchestration.

- Training & data pipelines – Data ingestion and processing flows (ETL, data lakes, feature stores) that prepare and feed data into models.

- RAG components – Retrieval-Augmented Generation stacks: vector DBs, document stores, and embedding pipelines that integrate external knowledge into AI.

- Inference APIs & endpoints – Model-serving APIs that expose predictions; critical access points that require strong controls.

- Orchestration & OSS libraries – Frameworks and open-source tools (e.g., LangChain) that stitch the AI pipeline together and form part of the supply chain.

“AI infrastructure” spans from the low-level foundation to the high-level application, and every layer expands your attack surface. Understanding these layers isn’t academic; it’s the first step in knowing where you’re exposed.

Real-World Breach Examples

This isn’t theoretical. We’ve already seen attacks on AI systems that exploit these new surfaces:

- Prompt Injection on Bing Chat (2023) - One day after Microsoft launched GPT-4-powered Bing Chat, a Stanford student used prompt injection to reveal its hidden system instructions. By asking the model to ignore its previous directives, he got the chatbot to expose its internal prompts, policies, and code name (“Sydney”). This highlighted how LLM interfaces can be manipulated to bypass safeguards and leak sensitive data.

- Leaked OpenAI API Keys in the Wild - In 2023, researchers found over 50,000 OpenAI API keys exposed on GitHub. Attackers used them to hijack GPT-4 access and rack up costs for victims; one key with a $150K limit was shared publicly. This underscores poor access control: hardcoded secrets, no key hygiene, and zero monitoring until the damage is done.

- Poisoned Models on Hugging Face - This year, researchers found malicious ML models on Hugging Face that triggered reverse shells via unsafe Pickle files. These backdoored models evaded detection and remained publicly available, highlighting the classic OSS trust issue. Pretrained models are code; without validation, a single malicious model file can silently compromise your system the moment it is loaded.

What’s the common theme? Insecure defaults and lack of oversight. Whether it’s an AI prompt with no access controls, credentials left unprotected, or blind trust in third-party models, these breaches show how AI tech will be abused like any other tech if we’re not proactive. There were no magic AI-specific hacks needed; attackers exploited weak spots (just as they do in web apps or cloud services).
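To make the "blind trust in third-party models" point concrete, here is a minimal sketch of inspecting a Pickle-based model file before loading it. It walks the pickle opcodes with Python's standard `pickletools` module, so nothing is ever unpickled, and reports any import that is not on an allowlist. The names `ALLOWED_IMPORTS` and `find_unexpected_imports` are illustrative assumptions, and the way `STACK_GLOBAL` references are resolved is a heuristic. Treat it as a first-pass filter, not a replacement for a dedicated scanner or a non-executable weight format.

```python
import pickletools
from collections import deque
from typing import List

# Hypothetical allowlist: imports a benign checkpoint in *your* stack is
# expected to reference. Anything else gets reported for review.
ALLOWED_IMPORTS = {
    "collections.OrderedDict",
}

# Opcodes that push a string onto the pickle stack.
_STRING_OPCODES = {
    "SHORT_BINUNICODE", "BINUNICODE", "BINUNICODE8", "UNICODE",
    "SHORT_BINSTRING", "BINSTRING", "STRING",
}


def find_unexpected_imports(data: bytes) -> List[str]:
    """Return imports referenced by a pickle stream that are not allowlisted.

    The stream is only parsed opcode by opcode; it is never unpickled.
    """
    unexpected = []
    recent = deque(maxlen=2)  # last two strings seen, used for STACK_GLOBAL
    for opcode, arg, _pos in pickletools.genops(data):
        if opcode.name in _STRING_OPCODES:
            recent.append(arg)
            continue
        if opcode.name == "GLOBAL":
            # pickletools reports the argument as "module name".
            module, _, name = arg.partition(" ")
            ref = f"{module}.{name}"
        elif opcode.name == "STACK_GLOBAL":
            # Heuristic: module and name are usually the last two strings pushed.
            ref = ".".join(recent) if len(recent) == 2 else "<unresolved>"
        else:
            continue
        if ref not in ALLOWED_IMPORTS:
            unexpected.append(ref)
    return unexpected
```

A file that reports something like `builtins.eval` or `os.system` should never reach `pickle.load`. In practice the allowlist would need to cover whatever your ML framework legitimately imports during deserialization.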

Worst-Case Scenarios

Let’s paint the picture of what could happen in an enterprise if AI infrastructure is compromised. These are the nightmare scenarios keeping security leaders up at night:

- Model Extraction – Attackers can query your model’s API to replicate it, stealing IP and probing for vulnerabilities offline. This enables undetectable exploit development and loss of competitive edge.

- Training Data Poisoning – Subtle manipulations to training data can embed hidden triggers, leading to biased or malicious outputs. These backdoors often go unnoticed until activated in production.

- RAG Compromise – Poisoned documents in vector databases can distort model responses. Even a few rogue files can make AI confidently serve misinformation, posing serious reputational and compliance risks.

- Inference Endpoint Abuse – Unprotected endpoints can be spammed, exploited for data leakage, or manipulated via prompt injection. Without proper logging or filters, misuse may go undetected until damage occurs.

- Supply Chain Compromise – Malicious code, models, or dependencies in your pipeline can introduce threats before deployment. If integrity isn’t verified, you may ship vulnerabilities without knowing it.

In large enterprises, these scenarios might not be noticed immediately. AI systems are new territory for many security operations teams; the monitoring and detections are immature. The result? An AI incident (data leakage, rogue outputs, manipulated analytics) could materialize as a serious business impact (privacy violation, financial loss, reputational damage) by the time anyone realizes something’s wrong.
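One inexpensive control against the supply chain scenario above is to pin every model artifact to a known digest and refuse to load anything that does not match. The sketch below uses only the standard library. The function names and the idea of storing the expected digest alongside your deployment config are assumptions for illustration, and hash pinning only proves the file is the one you reviewed, not that the reviewed file was safe.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream a file through SHA-256 so large model files fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: Path, expected_sha256: str) -> bool:
    """Return True only if the file's digest matches the pinned value."""
    actual = sha256_of_file(path)
    # compare_digest avoids leaking how many leading characters matched.
    return hmac.compare_digest(actual, expected_sha256.strip().lower())
```

A deployment step would call `verify_artifact` before handing the file to any loader and fail closed on a mismatch.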

Best Practices by Layer

So how do we defend this new attack surface? It requires a layered approach, mirroring the AI stack itself. Here are pragmatic best practices at each layer of AI infrastructure:

- Model & Core Engine – Restrict access with strong auth and RBAC. Monitor queries for abuse, rate-limit to prevent model scraping, and red-team regularly to uncover weaknesses and patch them.

- Data Pipelines – Use trusted sources, validate data, and track lineage. Isolate sensitive training workflows, sanitize inputs, and monitor for anomalies to prevent poisoning or leakage.

- Inference APIs & Endpoints – Enforce zero-trust: secure endpoints, limit usage, log metadata, and filter responses. Monitor for misuse and set tight access policies for tokens and network exposure.

- RAG (Retrieval-Augmented Generation) Knowledge Stores – Sanitize external data, control access to vector DBs, and monitor retrieval patterns. Encrypt stores, apply least privilege, and regularly audit indexed content for integrity.

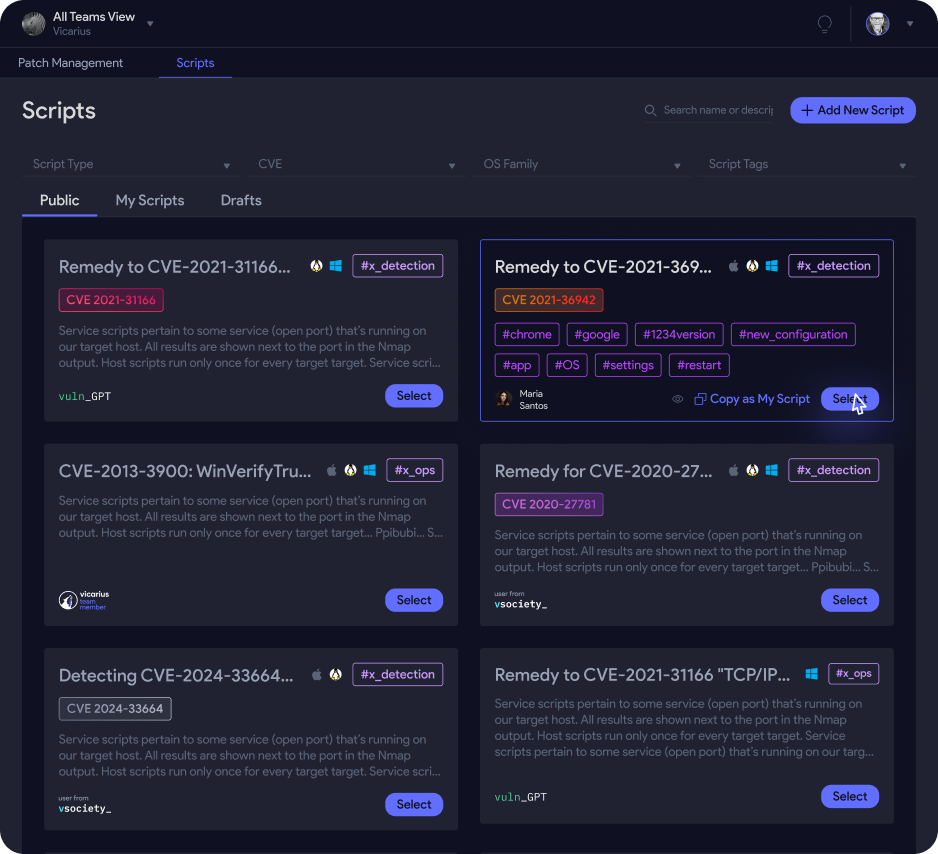

- Supply Chain & OSS – Maintain an SBOM, use signed packages, and verify model integrity. Scan dependencies for CVEs, monitor OSS changes, and sandbox or validate models before use.

- Orchestration & CI/CD – Isolate AI pipelines, enforce RBAC and approvals, and treat prompts as code. Apply standard security reviews, testing, and controls throughout the model deployment lifecycle.
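As one example of the "rate-limit to prevent model scraping" advice, here is a minimal sketch of a per-key token-bucket limiter that could sit in front of an inference endpoint. The class names, the `api_key_id` parameter, and the capacity and refill numbers are all illustrative assumptions. A production setup would more likely enforce this at an API gateway with shared state rather than in process memory.

```python
import time
from typing import Callable, Dict


class TokenBucket:
    """Allows bursts up to `capacity`, refilled at `refill_per_second`."""

    def __init__(self, capacity: float, refill_per_second: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock
        self.tokens = capacity
        self.last_refill = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        elapsed = now - self.last_refill
        # Add tokens earned since the last call, capped at capacity.
        self.tokens = min(self.capacity,
                          self.tokens + elapsed * self.refill_per_second)
        self.last_refill = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


class PerKeyRateLimiter:
    """Keeps one bucket per API key so one noisy client cannot starve others."""

    def __init__(self, capacity: float, refill_per_second: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._capacity = capacity
        self._refill = refill_per_second
        self._clock = clock
        self._buckets: Dict[str, TokenBucket] = {}

    def allow(self, api_key_id: str) -> bool:
        bucket = self._buckets.get(api_key_id)
        if bucket is None:
            bucket = TokenBucket(self._capacity, self._refill, self._clock)
            self._buckets[api_key_id] = bucket
        return bucket.allow()
```

Rejected calls are worth logging with the key identifier, since a client that sits at its limit for hours is a useful signal for the abuse monitoring described above.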

Final Thoughts

Securing AI isn’t just about prompt safety; it’s an architectural challenge. CISOs must treat AI pipelines like core production systems, with full visibility, ownership, and coordination across security, IT, and data teams. AI is evolving fast, and reactive patching won’t keep up. Preemptive, built-in security is the only path forward.

At Vicarius, we work with teams who see that continuous hardening and exposure management are essential. The AI security playbook is still emerging, but step one is clear: map your AI stack, define it as critical infrastructure, and act with intent. With layered defenses and clear oversight, we can embrace AI’s potential without compromising control.